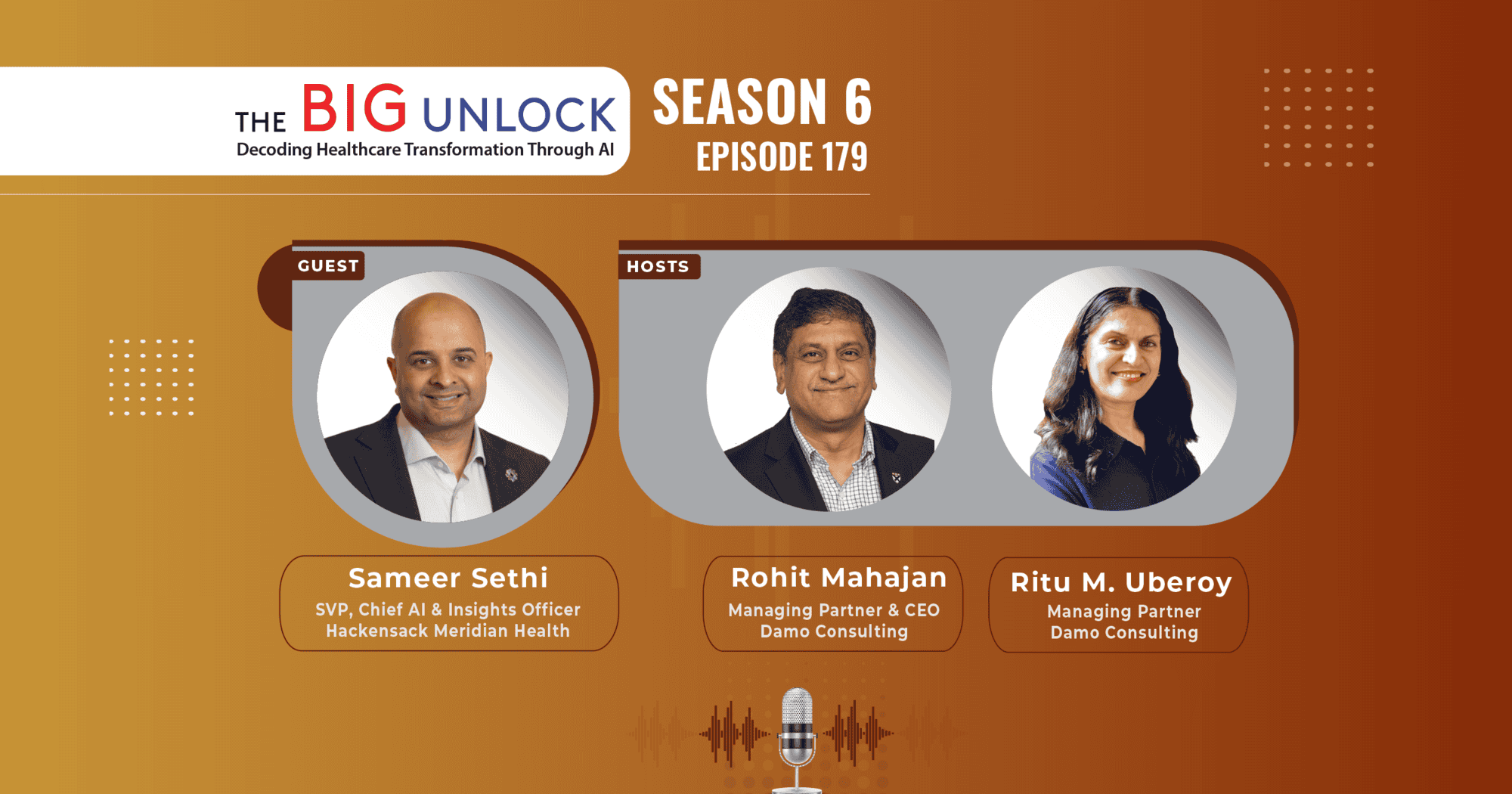

Ritu: Hi Sameer. Welcome to our podcast, The Big Unlock, season six. My name is Ritu M. Uberoy, and I’m the Managing Partner at Damo and BigRio, based out of Gurugram, India. It’s always a pleasure to chat with CMIOs, CIOs, CDOs, and Chief Information and AI Officers about the latest developments in healthcare systems. I hope this will be a really exciting and engaging conversation. Welcome to the podcast once again.

Rohit: Thank you, Sameer. Welcome to the podcast. I’m Rohit Mahajan, Managing Partner and CEO at BigRio. Over to you.

Sameer: Thanks for having me, folks. Excited about this conversation.

I’m Sameer Sethi. I function as the AI and Insights Officer for Hackensack Meridian Health. We are a health system based out of New Jersey, a combination of 18 hospitals and close to 500 care sites. We do this with the help of around 40,000 team members, both clinicians and non-clinicians. Our care is mostly across New Jersey, and we also have a very big digital presence.

My focus within the organization is primarily on four areas. One is data and analytics. This is where we produce and ingest data from various source systems, with our electronic medical record being the biggest one. My team helps normalize that data and make it available not just as raw data, but also as insights.

The second is AI. This involves moving beyond explaining what has happened to understanding why it happened and predicting what may happen next. Those capabilities are now more easily available to us through third-party models. Building capabilities that leverage large language models and generative AI is a big piece of our portfolio as well.

The third is robotic process automation. This is where we write code to emulate human functions where appropriate. We have a large program focused on automating mundane tasks, tasks that demand accuracy machines can deliver better, or tasks that need speed humans cannot match. We have over 200 automations in production today, a really large portfolio we’ve been building for quite some time.

And the fourth is software development. At times, when purchasing software or capabilities is not viable for Hackensack—either because it’s not what we need, it’s too expensive, or it’s not available—we will write software and deliver it to our clinicians and operators.

So that’s what I do for Hackensack. I’ve been here for three and a half years, and it’s been a really rewarding experience.

Ritu: Thank you for that introduction, Sameer. Lots to talk about. We were very interested in your view that AI shouldn’t be done just for the sake of AI, but put to the right use. We would love to hear about some applications you’ve built at Hackensack where you feel AI has driven transformational change and made the patient experience better.

Sameer: Absolutely. We’ve been excited about this. We’ve been at it for almost three years now, with a very heavy focus on AI in the last two years. Three years ago, the conversation on AI was more about machine learning. Now it’s more about generative AI, and the talk track behind why this is so important has changed as well.

At Hackensack, because we are so serious about this, we’ve really thought about how to put AI to use. This goes beyond just talking about how AI is good and the governance around it. While we do that, we are also focused on building the right solutions. It’s one thing to look at tools like ChatGPT and say you can ask a question and get an answer back. But the real value is in embedding these technologies into workflows. That’s what we are most focused on.

Giving someone access to a conversational AI—whether by voice or typing—gets you to a certain point. But the real impact is in customizing it for a clinician at the time of care. That’s where Hackensack has put its efforts.

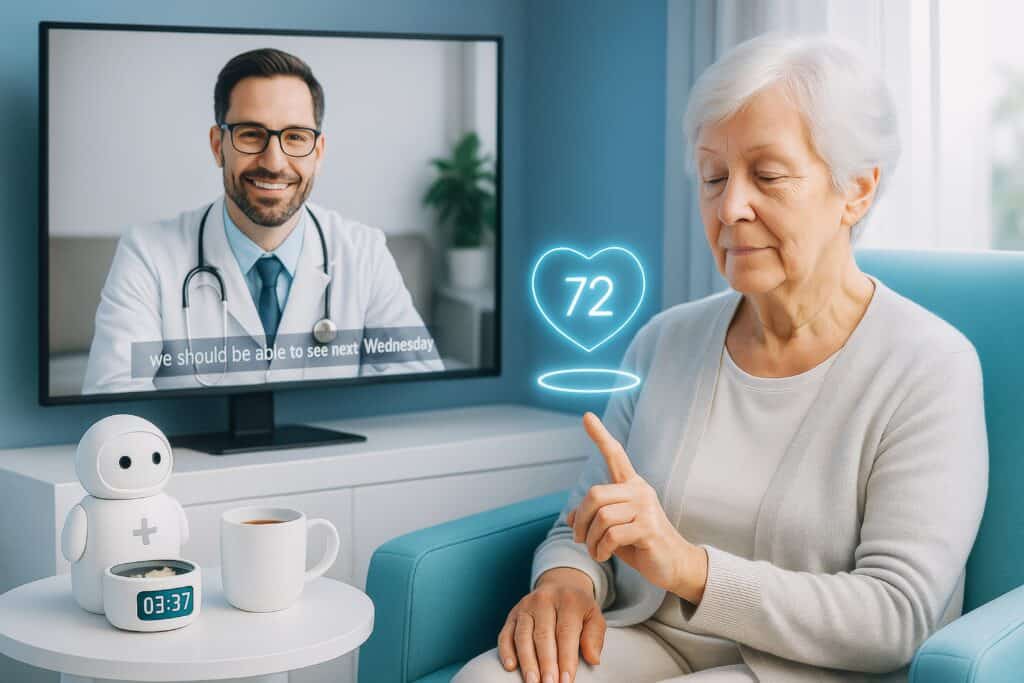

A good example: as a hospital system, our clinicians see patients, and when they do, they face a sea of valuable data. Reviewing that data takes time—time they often don’t have, or time taken away from the patient. One of our first use cases when large language models became available was to use generative AI to summarize that data. We essentially wanted to improve the experience.

We’re all consumers of healthcare. I’m sure you’ve been in situations where you go to a doctor’s office and the doctor is staring at the screen while trying to listen to you. What that clinician is doing is trying to understand your clinical record, which takes time. We made that easier. We provided a button for clinicians to summarize a patient’s information instantly—in seconds.

That use case has evolved. Initially, the summaries were general. But through feedback we realized summaries had to be by specialty. An oncologist, for example, needs different details compared to a primary care physician. So, we started with eight specialties and today it’s almost 15, giving physicians the option to summarize by specialty. That has been very valuable.
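One plausible way to implement specialty-specific summaries is to keep a template per specialty that tells the language model what to prioritize. The sketch below only builds the prompt text; the specialty names and focus lists in `SPECIALTY_FOCUS` are hypothetical, and the actual model call is deliberately left out.

```python
# Hypothetical focus lists; the real specialty templates are not described in the conversation.
SPECIALTY_FOCUS = {
    "oncology": ["cancer staging", "current treatment regimen", "recent imaging", "tumor markers"],
    "primary_care": ["active problems", "preventive care gaps", "medications", "recent visits"],
    "cardiology": ["cardiac history", "recent ECG and echo results", "anticoagulation", "lipid panel"],
}
# Fallback used when a specialty has no dedicated template (the original "general" summary).
GENERAL_FOCUS = ["active problems", "medications", "allergies", "recent encounters"]


def build_summary_prompt(specialty: str, record_text: str) -> str:
    """Build an LLM prompt that asks for a summary tailored to one specialty."""
    focus = SPECIALTY_FOCUS.get(specialty, GENERAL_FOCUS)
    bullet_list = "\n".join(f"- {item}" for item in focus)
    return (
        f"Summarize the patient record below for a {specialty.replace('_', ' ')} clinician.\n"
        f"Prioritize:\n{bullet_list}\n"
        "Flag anything uncertain; the clinician will verify against the full record.\n\n"
        f"RECORD:\n{record_text}"
    )
```

Keeping the specialty knowledge in data rather than code means growing from eight specialties to fifteen is a matter of adding entries, not rewriting the summarizer.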

That’s one way we’ve used generative AI technology and put it to good use. This continues to grow. For example, we’ve also applied it to patient survey data. We collect survey information from patients and families post-discharge. The structured data was already in dashboards, but we realized patients were typing a lot of comments.

So, we created sentiment analysis on top of that unstructured data and gave it back to the business at a unit level. This wasn’t happening before. Operators can only read so many comments. By synthesizing comments in volume, we can surface trends across unstructured data. Again, we used large language models to deliver those insights back to the business.
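To make unit-level sentiment concrete, here is a minimal sketch. It assumes a hypothetical `classify_sentiment` function standing in for the large language model call; a toy keyword scorer is used only so the example runs offline. Comments are grouped by unit, and each unit gets a share of negative comments, the kind of trend an operator could not see by reading comments one at a time.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SurveyComment:
    unit: str  # hypothetical field: the care unit the patient was discharged from
    text: str  # free-text comment typed by the patient or family


# Placeholder for an LLM-based classifier; these keyword lists are illustrative only.
NEGATIVE_CUES = ("wait", "rude", "noisy", "cold", "ignored")
POSITIVE_CUES = ("kind", "clean", "helpful", "thank")


def classify_sentiment(text: str) -> str:
    """Toy stand-in: label a comment by counting positive vs negative cue words."""
    lowered = text.lower()
    neg = sum(cue in lowered for cue in NEGATIVE_CUES)
    pos = sum(cue in lowered for cue in POSITIVE_CUES)
    if neg > pos:
        return "negative"
    if pos > neg:
        return "positive"
    return "neutral"


def unit_sentiment_summary(comments: list) -> dict:
    """Count sentiment labels per unit and compute each unit's share of negative comments."""
    counts = defaultdict(lambda: {"positive": 0, "neutral": 0, "negative": 0})
    for c in comments:
        counts[c.unit][classify_sentiment(c.text)] += 1
    summary = {}
    for unit, tally in counts.items():
        total = sum(tally.values())
        summary[unit] = {**tally, "total": total, "negative_share": tally["negative"] / total}
    return summary
```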

This is an important part of the conversation. When we talk about AI and how to put it to use, there’s the aspect of finding the right use cases, but there’s also a technical side. There’s a lot of discussion around tools like ChatGPT not giving the right answers. At Hackensack, we put together a center of excellence that focuses on indexing. Why? Because indexing makes the answers better.

So, at Hackensack we’ve not only been exercising the muscle of finding the right use cases and delivering them, but also learning how to harness the technology and use it better. This technology is here to stay. It’s really good—you just have to know how to put it to the right use.

Ritu: Thank you, Sameer, for sharing those use cases. It’s really incredible that you were able to do clinical note summarization, especially across so many specialties, and provide customized information to physicians. Was there any pushback? How did you handle the literacy or acceptance side of it? Some people we talk to feel that innovation is difficult for healthcare professionals to accept and takes much longer. I’m sure they saw the benefits, but it might have been a steep learning curve. What was your experience?

Sameer: At Hackensack, this has been a bit of us learning from our mistakes over time. How you build these things is really important. And when I say “how,” I mean the non-technical side.

What we did well was first understanding the problem that needed to be solved, and then partnering with the clinicians who wanted to solve it. When there’s mutual participation, there’s better acceptance. We also set clear expectations from the start—what this technology is, what it isn’t, how accurate it is, and how accurate it isn’t.

For example, with clinical note summarization, our intent was never to replace anyone’s work. Our intent is to help people work at the top of their license. So, we tell clinicians: this isn’t 100% accurate. You still have the whole patient record if you want to review it. If something in the summary doesn’t look right, it’s your obligation to check the full record. But what we’ve given you is a strong head start. That’s what reduces pajama time. That level of transparency has helped us get much better acceptance.

And by the way, my team and I welcome pushback. It teaches us a lot. As technologists, we’re keen to build solutions, but they have to be used by clinicians. When we get pushback, we consider it feedback. Maybe we’re not explaining the solution well, maybe we haven’t designed the right user interface, or maybe we haven’t embedded the capability properly into the workflow. We look at the positives in pushback, and it has worked to our benefit.

Ritu: That reminds me—many years ago I attended a lecture by Marshall Goldsmith where he said, “I don’t know why people call it feedback. It should be called feed-forward.” Because anytime you get feedback, it’s actually helping you move forward. I really love that line and share it with so many people.

Sameer: We actually make a very strong attempt to build feedback, or feed-forward, mechanisms into almost everything we deliver. Not capturing that feedback over time results in a lack of adoption.

Ritu: Yeah, because you start drifting away from what people actually need.

Sameer: Exactly. Even a basic thumbs up or thumbs down is valuable. A thumbs down signals us to go back to the user and ask, “What happened here?” We try not to complicate feedback collection, but we always make sure to build feedback loops into our solutions.
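A thumbs-up/thumbs-down loop like this needs very little code. The sketch below is illustrative only; `Feedback`, `FeedbackLog`, and the follow-up queue are hypothetical names, not Hackensack’s system. It logs each vote per feature and routes every thumbs-down into a queue of users to go back and ask, “What happened here?”

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Feedback:
    feature: str      # hypothetical feature name, e.g. "note_summary"
    user_id: str
    thumbs_up: bool
    comment: str = ""


@dataclass
class FeedbackLog:
    events: List[Feedback] = field(default_factory=list)
    follow_up_queue: List[Feedback] = field(default_factory=list)

    def record(self, fb: Feedback) -> None:
        """Store every vote; a thumbs-down also triggers a follow-up with the user."""
        self.events.append(fb)
        if not fb.thumbs_up:
            self.follow_up_queue.append(fb)

    def approval_rate(self, feature: str) -> Optional[float]:
        """Share of thumbs-up votes for a feature, or None if nobody has voted yet."""
        votes = [fb.thumbs_up for fb in self.events if fb.feature == feature]
        if not votes:
            return None
        return sum(votes) / len(votes)
```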

Ritu: So, you’re saying one of the keystones of AI adoption at Hackensack is bringing everybody along for the ride and ensuring buy-in from all stakeholders.

Sameer: You know, I also want to add something valuable to this conversation: we’ve thought a lot about governance. I think all of us have, but it’s still such a buzzword out there, and people don’t always know what it really means. So I’ll tell you about our journey.

We call it our governance pyramid. At the bottom is the technical tier, which is our team evaluating and building AI. On top of that, and more important in this context, is what we call our AI and Automation Governance Group. This is made up of 13 domains across the health system coming together. When we built our governance process, we invited representation from every domain—legal, HR, clinical documentation, finance, and others—so that every voice had a seat at the table. That inclusion really helped us.

Then we have the next level up, which is our governance committee, primarily the executive leadership team. These are the folks that help us prioritize, because everything can look good, but we can’t do everything. So the committee decides which problems to solve first and which areas deserve focus. That model has been very helpful in keeping us on track.

Sitting on top of all this is our board, which has also played a very large role. We held our annual board summit last year. As you can imagine, when we started doing AI, and especially when generative AI came in, there was a rush of demands from the business. Everyone was saying, “I hear this is available, do this,” or “This looks really cool, let’s try it.” At a certain point, I honestly didn’t know which direction to look in.

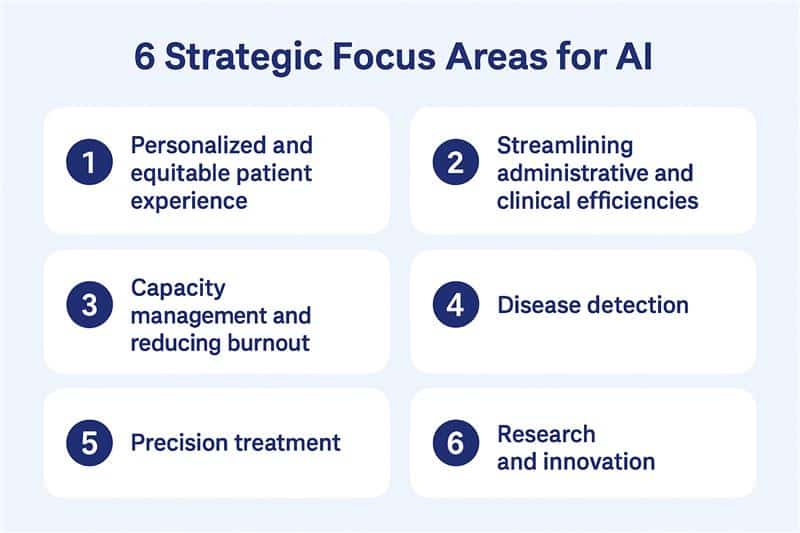

So we went to the board and established six areas of focus for Hackensack. The reason we did this was because we knew if we didn’t, we’d end up chasing shiny objects. With the help of the board and our executive leadership team, we identified six strategic areas, and the principle was simple: if a use case didn’t fall into these areas, we wouldn’t do it.

The six areas are creating personalized and equitable patient experiences, streamlining administrative and clinical efficiencies, capacity management and reducing burnout, disease detection, precision treatment by customizing therapies for patients, and research and innovation. These are broad enough that most initiatives can align, but focused enough to give us discipline.

Every use case that comes to the governance committee now goes through a scoring process, which looks at ROI and how many of these buckets it fits into. The more it aligns with our focus areas, the higher its score, and that’s what determines where we put our efforts. That process has been very valuable for us and has helped us stay focused on the right problems.
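The conversation doesn’t give the actual scoring formula, so the weights, the ROI scale, and the area identifiers below are all assumptions. As a minimal sketch: a use case earns points for each of the six focus areas it touches plus a weighted ROI term, anything outside every focus area is dropped, and the remaining backlog is reviewed in score order.

```python
from dataclasses import dataclass
from typing import List, Set

# The six focus areas, as hypothetical identifiers.
FOCUS_AREAS = frozenset({
    "patient_experience",       # personalized and equitable patient experiences
    "efficiency",               # administrative and clinical efficiencies
    "capacity_and_burnout",     # capacity management and reducing burnout
    "disease_detection",
    "precision_treatment",
    "research_and_innovation",
})

# Hypothetical weights; the real rubric is not described.
AREA_WEIGHT = 1.0
ROI_WEIGHT = 2.0


@dataclass
class UseCase:
    name: str
    areas: Set[str]
    estimated_roi: float  # hypothetical: expected return per dollar invested


def score(uc: UseCase) -> float:
    """More focus-area alignment and higher ROI both raise the score."""
    unknown = uc.areas - FOCUS_AREAS
    if unknown:
        raise ValueError(f"Unknown focus areas: {sorted(unknown)}")
    return AREA_WEIGHT * len(uc.areas) + ROI_WEIGHT * uc.estimated_roi


def prioritize(backlog: List[UseCase]) -> List[UseCase]:
    """Drop use cases outside every focus area, then rank the rest by score."""
    eligible = [uc for uc in backlog if uc.areas]
    return sorted(eligible, key=score, reverse=True)
```

The hard filter in `prioritize` mirrors the rule that a use case outside the six areas isn’t pursued at all, no matter how attractive its ROI looks.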

Ritu: Most of the CIOs we talk to say they’re concentrating more on the digital front door and the patient experience. They want to stay away from disease detection and anything that requires FDA approval. I’m curious: when you talk about disease detection, what are some of the use cases there, and how do you get around that?

That’s because of exactly the problems you mentioned earlier: LLMs still have hallucinations and other issues, and a human in the loop has to review all the results. So, for disease detection, how do you solve that?

Sameer: That’s a great question, and I think you’re asking it with generative AI in mind, which makes sense because that’s where most of the buzz is right now. But the reality is that disease detection is much more a machine learning use case than a GenAI one. With machine learning, you don’t really have hallucinations, or they’re almost nonexistent. And that’s an important distinction. Too often people hear “AI” today and immediately think only of GenAI, when in fact, there’s so much more to it.

What’s interesting is that when we had our last board summit, we actually ran a voting exercise across the six areas of focus we had defined. And surprisingly, disease detection came out on top. Right after that was streamlining administrative efficiencies and addressing burnout—clinicians, as you can imagine, pushed for burnout relief, and operators pushed for efficiency. But overall, the board said disease detection should be front and center.

And honestly, it makes sense. We’re a hospital system. If we can detect disease earlier, we can keep patients healthier. That’s the whole point of what we do.

Let me share a couple of examples. The first is mortality detection. This one is personal for me. A few years ago, I lost my mother-in-law, and I’ll never forget the palliative care team telling us, “We should have been here six months ago.” I remember sitting with the physician and asking, “What happened? Why didn’t anyone flag this earlier?” And the honest answer was, they’re not wired that way. Physicians and nurses are trained to keep fighting, to keep trying to fix the patient. Letting go and saying, “It may be time for palliative care,” doesn’t come naturally.

So what we did was build a model that produces a mortality score. And then we embedded it directly into the clinical workflow. Here’s how it works: a clinician might go into the patient record to prescribe something simple, like Tylenol. At that moment, a best practice alert pops up and says, “The model predicts a high likelihood of mortality within six months. Consider palliative care.” The clinician can review the labs, the patient history, and if they agree, they click “yes.” That automatically creates an order for the palliative care team to engage. If they don’t agree, they hit “no” and provide feedback, which we use to improve the model.

So what we’ve created is a chain: a mortality score that becomes a nudge, that then becomes an order—all with the clinician still in the driver’s seat. And the result is that patients are now being referred to palliative care much sooner. They likely would have ended up there eventually, but now families are getting the support earlier, which makes a huge difference.
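The score-to-nudge-to-order chain can be sketched as below. This is an illustrative sketch, not the production workflow: the mortality score is assumed to come from a model that isn’t shown, `ALERT_THRESHOLD` is a hypothetical cut-off, and `Alert`, `Order`, and `Override` are hypothetical record types. The clinician stays in the driver’s seat: “yes” creates the palliative care order, and “no” requires a reason that feeds back into model refinement.

```python
from dataclasses import dataclass
from typing import Optional, Union

# Hypothetical cut-off; the real model's alert threshold is not given.
ALERT_THRESHOLD = 0.7


@dataclass
class Alert:
    patient_id: str
    mortality_score: float
    message: str


@dataclass
class Order:
    patient_id: str
    order_type: str


@dataclass
class Override:
    patient_id: str
    reason: str  # captured so the model team can learn from disagreements


def maybe_fire_alert(patient_id: str, mortality_score: float) -> Optional[Alert]:
    """Nudge: raise a best practice alert only when predicted six-month risk is high."""
    if mortality_score < ALERT_THRESHOLD:
        return None
    return Alert(
        patient_id,
        mortality_score,
        "Model predicts high likelihood of mortality within six months. "
        "Consider palliative care.",
    )


def handle_response(alert: Alert, accepted: bool, reason: str = "") -> Union[Order, Override]:
    """The clinician decides: 'yes' creates an order, 'no' must come with a reason."""
    if accepted:
        return Order(alert.patient_id, "palliative_care_consult")
    if not reason.strip():
        raise ValueError("A reason is required when declining the alert")
    return Override(alert.patient_id, reason)
```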

Another example is chronic kidney disease. We’ve built capabilities that allow us to detect CKD much earlier in the progression of the disease, and that early identification can dramatically improve patient outcomes. And right now, we’re also working on chronic asthma detection.

So those are just a few of the use cases, but the bigger point is this: disease detection is core to us as a health system. It’s not about replacing clinical judgment. It’s about surfacing signals—signals that help our clinicians make the right calls, earlier, and give patients better care.

Ritu: That’s really interesting. We haven’t heard that from other hospitals, so it’s incredible to hear.

Sameer: You know, one thing I always want to emphasize is that what we’re building are not decision tools—they’re decision support tools. There’s a big difference. The final call always rests with the clinician. Take the mortality model I mentioned earlier: yes, it provides a nudge, but alongside the “yes” button, there’s always a “no” button.

Ritu: You’re alerting them, but the decision is still theirs.

Sameer: Exactly. And when they press “no,” they’re asked to provide a reason. That feedback is incredibly valuable to us. It helps us refine the model, learn where it might not align with clinical judgment, and continue improving it. But at the end of the day, the conversation with the patient, the clinical decisions—that all stays firmly in the hands of the physician. What we’re doing is simply surfacing insights at the right moment, in a way that makes patient care better.

Ritu: I think this has been a really insightful part of the conversation.

Thank you, Sameer, for sharing those use cases for disease detection. Looking forward, if you look at the next six months, a year, or maybe two years, what do you think the top three trends in AI will be? We talked a little about agents in our prep call yesterday, and you had a very good story about them, where you said that agents are mostly an orchestration platform.

So, if you’d like, talk a little about that and share what you think the next three big trends will be, or rather, the things that will come to the front line and become focus areas for Hackensack.

Sameer: I’ll tell you my top three are going to be agents, agents, and agents. This is an opportunity for us to use technology that is here now to orchestrate. When I think about agents, it’s a concept. The concept is: how do you orchestrate different technologies to work together and provide an output that a human can interact with more easily? That’s an agent.

In the past, folks like me have tried to build these ourselves, similar to how we built machine learning models. Today, those kinds of models are available from vendors; the ones available now are large language models rather than the machine learning models we built, but I’m just using that as a concept. What I’m trying to portray is that we’ve been trying to build these agents for a long while, but the tech had to be developed from scratch. Orchestrating RPA software and a database to work together in a certain sequence wasn’t easy. We did it, and today technology companies like UiPath, Google, and others have built platforms to orchestrate that.

Now the orchestration has become a lot easier. Why is orchestration important, especially in healthcare? Because we are now not just relying on humans to do certain things. In the past, we built AI and gave it to a human. Rightfully so, in some situations, a human has to interact with it, like the mortality example. But there are instances where we’re building AI signals that do not require a human. The next step can still be taken by a machine.

In the past, when building these signals, we would rely on a human to interact with them and do something, even when the human wasn’t needed.

That doesn’t hold true for all use cases and applications. In certain places you still need humans. What I’m most excited about is the places where I don’t need a human: how do I build for those and take them to the next step? Then those insights actually get used, rather than depending on a human who may not have time, or on a program that lacks buy-in. Now you can move to the next step without relying on a human. I think that is the evolution of AI, and that’s why agents are all three of my top focus areas.

Now look at the portfolio I’m privileged to own: RPA and AI and software. Now the world has given me a platform where I can plug all this together. I’ll use an example: as a health system, we receive a lot of denials from insurance companies.

Rohit: Mm-hmm.

Sameer: We rely on humans to take that denial, synthesize it, gather information, then generate a new appeal letter and send it. That’s a process that requires expertise, people, and time, and assembling it all delays cash flow until the insurer pays. What we’re building is an agent that will do most of that work: receiving a denial letter, extracting information about the denial (maybe a missing modifier or pre-authorization), then using a large language model to synthesize that information.

Then it taps into a rule-based engine we built: if the denial reason and modifier meet a certain rule, do this. I’m oversimplifying, but it relies on the rule-based engine to identify the opportunity and the deficiency in the previous claim or submission. Then it creates a new claim or appeal, using generative AI. RPA pushes it to a human who reviews, approves, and then it’s processed again with the insurance company. This was all done by humans previously. Now, orchestration platforms can switch between a large language model, a rule-based engine, generative AI, and RPA—moving all these pieces together. That saves time and a lot of money. Humans are still involved toward the end, reviewing, but the things we didn’t need humans for are now done by machines.
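The denial workflow can be sketched as a pipeline of interchangeable steps. Everything here is hypothetical: the LLM extraction and the generative drafting are stubbed with simple string logic, the `RULES` table is invented, and the “RPA” hand-off is just a `ReviewQueue`. What the sketch shows is the sequencing: machines handle extraction, rule matching, and drafting, and nothing goes back to the payer until a human approves it.

```python
import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Denial:
    claim_id: str
    reason: str  # normalized reason code, e.g. "missing_modifier"
    detail: str  # original letter text, kept for the human reviewer


@dataclass
class Appeal:
    claim_id: str
    action: str
    letter: str
    approved: bool = False  # only a human reviewer flips this


@dataclass
class ReviewQueue:
    """Stand-in for the RPA hand-off to human staff."""
    for_review: list = field(default_factory=list)  # drafted appeals awaiting approval
    manual: list = field(default_factory=list)      # denials no rule could handle


# Hypothetical rule table: denial reason -> corrective action.
RULES = {
    "missing_modifier": "Resubmit the claim with the corrected modifier.",
    "missing_preauth": "Attach the prior authorization record and appeal.",
}


def extract_denial(letter_text: str) -> Denial:
    """Stand-in for the LLM step: pull a claim ID and reason out of free text."""
    match = re.search(r"Claim\s*#\s*(\w+)", letter_text)
    lowered = letter_text.lower()
    if "modifier" in lowered:
        reason = "missing_modifier"
    elif "authorization" in lowered:
        reason = "missing_preauth"
    else:
        reason = "unknown"
    claim_id = match.group(1) if match else "UNKNOWN"
    return Denial(claim_id, reason, letter_text.strip())


def apply_rules(denial: Denial) -> Optional[str]:
    """Rule-based engine: map the denial reason to a corrective action, if any."""
    return RULES.get(denial.reason)


def draft_appeal(denial: Denial, action: str) -> Appeal:
    """Stand-in for the generative AI step that writes the appeal letter."""
    letter = (f"Re: claim {denial.claim_id}. We request reconsideration. "
              f"Corrective action taken: {action}")
    return Appeal(denial.claim_id, action, letter)


def process_denial(letter_text: str, queue: ReviewQueue) -> Optional[Appeal]:
    """Orchestrate: extract, apply rules, draft, then hand off to a human for approval."""
    denial = extract_denial(letter_text)
    action = apply_rules(denial)
    if action is None:
        queue.manual.append(denial)  # no rule matched: a person works it end to end
        return None
    appeal = draft_appeal(denial, action)
    queue.for_review.append(appeal)  # nothing is sent to the payer until approved
    return appeal
```

Because each step is a plain function, any one of them (for example the stubbed extraction) could be swapped for a real model call without changing the orchestration.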

Ritu: Amazing. Great to hear that, Sameer. Rohit, do you have any last questions for Sameer?

Rohit: Sameer, you’ve given us a lot of food for thought. I’ll be taking exhaustive notes. I have enough questions for another complete podcast, and I’m very interested in the center of excellence on data indexing, so I’ll come back to you on that as well. But to end the podcast, could you share with listeners what motivated you to get into healthcare, how you started, and your story?

Sameer: That’s a very good question, and I love sharing this story. It goes back almost 15 or 16 years. I worked in financial services. I married Simon, who is an occupational therapist. She works as hard as I do, if not harder. I still remember her coming home after we got married in 2006. I was at Deloitte, not in healthcare then, and she said, “I see you on the computer all day. You do great work and provide for the family, but I want to share some feedback: I made someone walk today. What did you do?” That triggered something in me. I wasn’t much of a charitable person, but I thought maybe I could do something that saves a life, or saves money in care. I looked into different things and eventually landed in analytics.

That’s what motivated me—I wanted a job and career where I could make an impact without going back to school to become a doctor or clinician. This is my calling, where my team’s hard work hopefully helps someone live better, or maybe, as in the example, die better. For me, it’s a very passionate thing. I tell people I came into healthcare and am never leaving, just for those reasons. Whenever I think about an opportunity beyond healthcare, I hear my wife saying, “I made someone walk—what did you do?”

Rohit: That’s an amazing story, Sameer. There is a saying at Harvard Business School about the benefit of a physical footprint, but what about an intellectual footprint that can span the world? You have so many great ideas that can be propagated. I’ll definitely be back in touch with you on that. Thank you so much.

Sameer: What’s most exciting for folks like me is that, first of all, the data is there. There’s so much data being generated now—from our watches, phones, doctors—it’s all available. Second, we’re not alone anymore. I remember the days when the only model you had was one you built yourself. Now, Google and OpenAI—everyone has a model. There’s so much opportunity between available data and capabilities. The third thing is willingness—consumer willingness. I have a relative I help with doctor visits. Pre-COVID, she always wanted to visit her doctor in person. Post-COVID, she asks, “Can I take a telemedicine appointment?” It’s a big change.

Consumers are open to digital means of delivery now. Technology will do well, though healthcare will always be a very people-centric business. But I’m excited—the data is there, capabilities are there, and consumers are ready.

————

Subscribe to our podcast series at www.thebigunlock.com and write us at [email protected]

Disclaimer: This Q&A has been derived from the podcast transcript and has been edited for readability and clarity.